Understanding the Parquet File Format: A Detailed Guide

If you work with big data, you will run into the Parquet file format sooner or later. Parquet is a columnar storage file format designed to make storing and processing large datasets efficient. In this article, I will walk you through what the format is, its key features, how a file is laid out, and where it is typically used.

What is Parquet?

Parquet is an open-source file format that was originally developed by Twitter and Cloudera and is now maintained as the Apache Parquet project. It stores large datasets in a columnar format, which means that the values of each column are stored together rather than row by row. Because the values in a column share a type and often repeat, they compress well, and a query that needs only a few columns can skip the rest. This makes Parquet a common choice for analytical big data applications.
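
To make the row-versus-column distinction concrete, here is a minimal sketch in plain Python. It does not read or write Parquet; the field names and values are made up for illustration, and the `to_columns` helper is hypothetical.

```python
# Three records, as a row-oriented store would hold them.
rows = [
    {"user_id": 1, "country": "US", "amount": 9.99},
    {"user_id": 2, "country": "DE", "amount": 4.50},
    {"user_id": 3, "country": "US", "amount": 12.00},
]


def to_columns(records):
    """Pivot a list of row dicts into one list of values per field."""
    columns = {}
    for record in records:
        for name, value in record.items():
            columns.setdefault(name, []).append(value)
    return columns


columns = to_columns(rows)

# An aggregate over one field touches a single list
# instead of walking every field of every row.
total_amount = sum(columns["amount"])
```

In the columnar form, all `country` values sit next to each other, which is what makes the encoding and compression techniques described in this article effective.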

Key Features of Parquet

Here are some of the key features of the Parquet file format:

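- Columnar Storage: As mentioned earlier, Parquet stores the values of each column together, so similar values compress well and a query reads only the columns it needs.

- Schema Evolution: Parquet supports schema evolution. Each file carries its own schema, so newer files in a dataset can add columns without older files being rewritten, and engines such as Apache Spark can merge compatible schemas at read time. An individual file is immutable once written.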

- Compression: Parquet supports various compression algorithms, including Snappy, Gzip, and LZ4, which can significantly reduce the size of your files.

- Encoding: Parquet supports multiple encoding schemes, such as run-length encoding (RLE), dictionary encoding, and bit-packing, which shrink repetitive or low-cardinality columns before compression is applied.

- Read and Write Support: Parquet is supported by many big data processing frameworks, including Apache Hive, Apache Spark, and Apache Hadoop.
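
The dictionary and run-length encodings listed above can be sketched in a few lines of plain Python. This is a simplified illustration of the idea only: the function names are hypothetical, and Parquet's actual on-disk encoding is a compact binary format (a hybrid of RLE and bit-packing), not Python lists.

```python
def dictionary_encode(values):
    """Replace each value with an index into a list of distinct values."""
    dictionary = []
    positions = {}
    indices = []
    for value in values:
        if value not in positions:
            positions[value] = len(dictionary)
            dictionary.append(value)
        indices.append(positions[value])
    return dictionary, indices


def run_length_encode(values):
    """Collapse consecutive repeats into [value, run_length] pairs."""
    runs = []
    for value in values:
        if runs and runs[-1][0] == value:
            runs[-1][1] += 1
        else:
            runs.append([value, 1])
    return runs


# A low-cardinality column with repeated neighbours.
country = ["US", "US", "US", "DE", "DE", "US"]
dictionary, indices = dictionary_encode(country)
runs = run_length_encode(indices)
```

Here the six strings reduce to a two-entry dictionary plus three short runs. The benefit grows with column length and repetition, which is why sorting data before writing often helps.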

How Parquet Works

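A Parquet file is organized hierarchically. The rows are split horizontally into row groups. Within each row group, the values of each column are stored together as a column chunk, and each column chunk is divided into pages, the unit at which encoding and compression are applied. File-level metadata, including the schema and the location of every row group and column chunk, is stored in a footer at the end of the file, so a reader can locate the columns it needs before reading any data.

Here is a simplified representation of a Parquet file structure:

| Part | Content |
|---|---|
| Header | Magic bytes (PAR1) |
| Row group 1 | Column chunk 1 (pages), Column chunk 2 (pages), etc. |
| Row group 2 | Column chunk 1 (pages), Column chunk 2 (pages), etc. |
| … | … |
| Footer | Metadata (schema, row group and column chunk locations, etc.), footer length, magic bytes (PAR1) |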
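
In the Parquet format specification, a file starts and ends with the 4-byte magic `PAR1`, and the 4 bytes just before the trailing magic hold the length of the metadata footer as a little-endian integer. The sketch below locates the footer using only that framing. It is an illustration, not a reader: `footer_span` is a hypothetical helper, the footer itself is Thrift-encoded and is not decoded here, and files with an encrypted footer use a different magic that this sketch does not handle.

```python
import struct

MAGIC = b"PAR1"


def footer_span(data: bytes):
    """Return (start, end) byte offsets of the metadata footer."""
    if len(data) < 12 or data[:4] != MAGIC or data[-4:] != MAGIC:
        raise ValueError("not a Parquet file: missing PAR1 magic bytes")
    # The 4 bytes before the trailing magic store the footer length.
    (footer_len,) = struct.unpack("<I", data[-8:-4])
    end = len(data) - 8
    start = end - footer_len
    if start < 4:
        raise ValueError("footer length exceeds file size")
    return start, end


# Synthetic bytes that mimic only the framing, not real Parquet content.
fake_body = b"row-group-bytes"
fake_footer = b"thrift-metadata"
image = MAGIC + fake_body + fake_footer + struct.pack("<I", len(fake_footer)) + MAGIC
start, end = footer_span(image)
```

Because the footer sits at the end, a reader typically seeks to the tail of the file first, reads the metadata, and then fetches only the column chunks a query needs.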

Benefits of Using Parquet

Using Parquet for your big data applications offers several benefits:

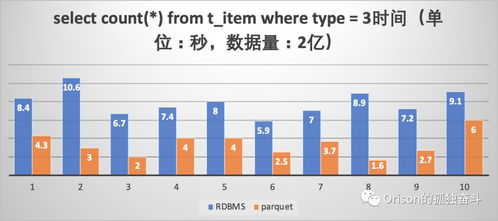

- Improved Performance: Because a query reads only the columns it needs and the data is encoded and compressed per column, Parquet can significantly reduce I/O and speed up analytical workloads.

- Scalability: Parquet is designed to handle large datasets. A file is split into row groups that can be processed independently, which lets distributed engines read it in parallel.

- Interoperability: Parquet is supported by many big data processing frameworks, which means that you can easily integrate it into your existing data processing pipeline.

- Cost-Effective: By reducing the size of your data files, Parquet can help you save on storage costs.

Use Cases of Parquet

Parquet is widely used in various big data applications, including:

- Data Warehousing: Parquet is used to store and process large datasets in data warehouses.

- Data Science: Data scientists use Parquet to store and process large datasets for machine learning and data analysis tasks.

- ETL (Extract, Transform, Load): Parquet is used in ETL processes to efficiently load and transform large datasets.

Conclusion

Parquet is a powerful and versatile file format that is well-suited for big data applications. By understanding its features and benefits, you can make informed decisions about its use in your data processing