Unlocking the Power of Data with Open Databricks File via Python

Are you looking to harness the full potential of your data using Python? If so, you’re in luck! Open Databricks File is a powerful tool that allows you to easily access and manipulate data stored in Databricks. In this article, I’ll guide you through the process of using Open Databricks File via Python, covering everything from installation to advanced techniques. Let’s dive in!

Understanding Open Databricks File

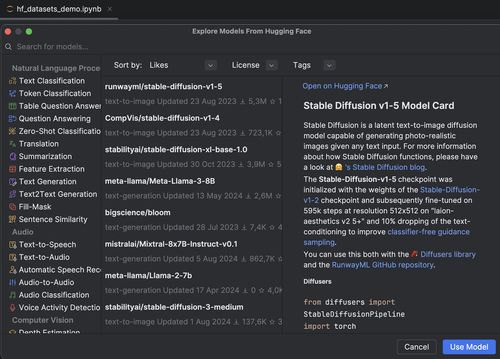

Before we get started, let’s take a moment to understand what Open Databricks File is and why it’s so valuable. Open Databricks File is a Python library that provides a simple and intuitive interface for interacting with Databricks. It allows you to easily read, write, and manipulate data stored in Databricks, making it an essential tool for data scientists and analysts.

One of the key features of Open Databricks File is its support for various data formats, including CSV, JSON, and Parquet. This means you can work with a wide range of data sources, making it a versatile tool for any data-related task.
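Multi-format support usually comes down to choosing the right reader for each file type. The sketch below shows that idea in plain pandas; it is not Open Databricks File's own API. The helper `read_any` is hypothetical and selects a reader from the file extension. It assumes pandas is installed, plus pyarrow or fastparquet for Parquet files.

```python
import os

import pandas as pd

# Map each supported file extension to the pandas reader that parses it.
# Parquet additionally requires pyarrow or fastparquet to be installed.
READERS = {
    ".csv": pd.read_csv,
    ".json": pd.read_json,
    ".parquet": pd.read_parquet,
}


def read_any(path: str) -> pd.DataFrame:
    """Hypothetical helper: load a CSV, JSON, or Parquet file based on its extension."""
    ext = os.path.splitext(path)[1].lower()
    try:
        reader = READERS[ext]
    except KeyError:
        raise ValueError(f"Unsupported file format: {ext!r}") from None
    return reader(path)
```

Usage is a single call, for example `df = read_any('path_to_your_file.csv')`. Because the mapping lives in a dictionary, adding another format takes one line, such as `'.xlsx': pd.read_excel`.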

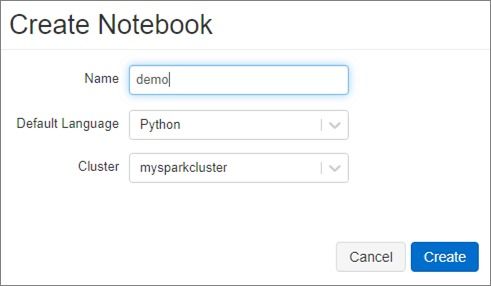

Installation and Setup

Now that we have a basic understanding of Open Databricks File, let’s move on to installation and setup. You’ll need Python installed on your system. After that, install Open Databricks File with pip:

```bash
pip install databricks
```

After installing the library, you’ll need to authenticate with your Databricks workspace. You can do this by providing your Databricks token:

```python
from databricks import Client

client = Client(token='your_databricks_token')
```

Make sure to replace `your_databricks_token` with your actual Databricks token. Once you’ve authenticated, you’re ready to start working with your data.
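Hard-coding a token in source code makes it easy to leak. A safer pattern is to read the token from an environment variable. The sketch below assumes you have exported it as `DATABRICKS_TOKEN`, which is the variable name the Databricks CLI and SDK also read. The helper `load_token` is hypothetical, and the `Client` call is left as a comment so the snippet runs on its own.

```python
import os


def load_token(var_name: str = "DATABRICKS_TOKEN") -> str:
    """Hypothetical helper: read a Databricks personal access token from the environment."""
    token = os.environ.get(var_name, "").strip()
    if not token:
        # Fail early with a clear message instead of sending an empty token.
        raise RuntimeError(
            f"Set the {var_name} environment variable to your Databricks token."
        )
    return token


# Usage with the client from the article (commented out so this sketch is self-contained):
# from databricks import Client
# client = Client(token=load_token())
```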

Reading Data from Databricks

One of the primary use cases for Open Databricks File is reading data from Databricks. You can use the `read_csv`, `read_json`, and `read_parquet` functions to read data from various sources:

```python
# Each call returns a pandas DataFrame; use the one that matches your file's format.
df = client.read_csv('path_to_your_file.csv')
df = client.read_json('path_to_your_file.json')
df = client.read_parquet('path_to_your_file.parquet')
```

These functions return a pandas DataFrame, which you can then manipulate with standard pandas operations. For example, you can filter, sort, and aggregate your data as needed:

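```python
# Keep only rows where column_name is greater than 10
filtered_df = df[df['column_name'] > 10]
# Sort rows by column_name (ascending by default)
sorted_df = df.sort_values(by='column_name')
# Sum the other columns within each column_name group
aggregated_df = df.groupby('column_name').sum()
```

Writing Data to Databricks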

Besides reading data from Databricks, you can also write data back to the platform. Because you are working with a pandas DataFrame, you can use its standard `to_csv`, `to_json`, and `to_parquet` methods to write data in different formats:

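```python
df.to_csv('path_to_your_file.csv', index=False)
df.to_json('path_to_your_file.json', orient='records')
df.to_parquet('path_to_your_file.parquet')  # requires pyarrow or fastparquet
```

These methods write to the given path on the file system where your code runs. On a Databricks cluster, a path under `/dbfs/` typically points to DBFS. You can also use the `to_sql` method to write data to a database table: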

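```python
from sqlalchemy import create_engine

engine = create_engine('your_databricks_sqlalchemy_url')  # placeholder connection URL
df.to_sql('table_name', con=engine, if_exists='replace', index=False)
```

Note that `con` must be a SQLAlchemy engine or connection (or a `sqlite3` connection), not the Databricks client. Replace the placeholder URL with the SQLAlchemy connection string for your workspace.

Advanced Techniques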

Once your data is loaded into a DataFrame, several more advanced techniques can help you work with it efficiently. Here are a few examples:

1. Data Transformation

Data transformation is a crucial step in the data analysis process. Because the data comes back as a pandas DataFrame, you can transform it with pandas methods such as `apply`, `map`, and `transform`:

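```python
# Double every value in a numeric column
doubled = df['column_name'].apply(lambda x: x * 2)
# Upper-case every string in a text column (map works element by element)
upper_text = df['text_column'].map(lambda s: s.upper())
# Add 1 to a numeric column; transform returns a result the same length as its input
incremented = df['column_name'].transform(lambda x: x + 1)
```

2. Data Aggregation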

Data aggregation is another important part of data analysis. For this, you can use pandas methods such as `groupby`, `sum`, `mean`, and `count`:

aggregated_df =