Understanding Parquet Files: A Detailed Guide for Data Professionals

Parquet has become a standard file format in big data systems because it stores large analytical datasets compactly and lets query engines scan them quickly. Understanding how a Parquet file is organized helps data professionals get the most out of it. This article covers the structure of Parquet files, their benefits, and their practical applications.

What is a Parquet File?

Apache Parquet is an open-source, columnar storage file format designed for big data systems such as Apache Hadoop and Apache Spark. It addresses the limitations of row-oriented text formats such as CSV and JSON, which force a reader to scan and parse every row in full even when a query needs only a few columns. This makes those formats inefficient for analytical queries over large datasets.

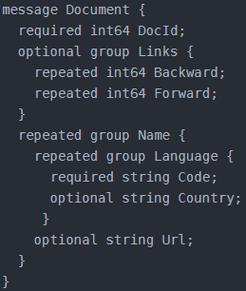

Parquet stores data column by column: within each block of rows, all values of a given column are stored together. Query engines can therefore read only the columns a query references. Because neighboring values share a type and often repeat, they also compress and encode far better than mixed row data. Parquet additionally supports nested data structures, so it can hold complex types such as structs, lists (arrays), and maps.
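To make the row-versus-column distinction concrete, here is a minimal Python sketch using plain lists and dictionaries; no Parquet library is involved. It pivots a few hypothetical records into one list per column and then answers a query that touches only the `temp` column. The record fields and the `to_columns` helper are illustrative assumptions, not part of any Parquet API.

```python
# Row-oriented layout: each record keeps all of its fields together,
# much like one line of a CSV or JSON Lines file.
rows = [
    {"id": 1, "city": "Oslo", "temp": 3.5},
    {"id": 2, "city": "Oslo", "temp": 4.0},
    {"id": 3, "city": "Lima", "temp": 19.0},
]


def to_columns(records):
    """Pivot row dicts into one list per column (a columnar layout).

    Assumes every record has the same keys, as in a fixed schema.
    """
    columns = {}
    for record in records:
        for name, value in record.items():
            columns.setdefault(name, []).append(value)
    return columns


columns = to_columns(rows)

# "Average temperature" needs only one column, so a columnar reader
# never has to touch the "id" or "city" values.
temps = columns["temp"]
average_temp = sum(temps) / len(temps)

print(columns["city"])  # ['Oslo', 'Oslo', 'Lima']
print(average_temp)
```

The `city` list also shows why columnar data compresses well: identical values sit next to each other instead of being interleaved with numbers.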

Structure of a Parquet File

A Parquet file is made up of the following key components:

- Header: The 4-byte magic number `PAR1`, which identifies the file as Parquet.

- Row Group: A horizontal slice of the data containing a subset of the rows. A file can contain one or more row groups, and each row group holds exactly one column chunk for every column in the schema.

- Column Chunk: All of the data for a single column within one row group. Column chunks are stored contiguously, so a reader can fetch only the columns it needs.

- Page: The smallest unit of storage inside a column chunk. Pages are the unit of encoding and compression, so each page can be decoded independently.

- Footer: The file metadata, which records the schema, the location of every row group and column chunk, and per-column statistics such as minimum and maximum values. It is followed by a 4-byte length field and a second copy of the `PAR1` magic number. Readers start by reading the footer.
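The nesting of row groups, column chunks, and pages can be modeled with a few Python dataclasses. In a Parquet file, rows are split into row groups, each row group holds one column chunk per column, and each column chunk is split into pages. This is a simplified model under stated assumptions. The class names and the `build_row_groups` helper are hypothetical, and real writers size row groups and pages by bytes rather than by row or value counts.

```python
from dataclasses import dataclass, field


@dataclass
class Page:
    values: list  # a run of consecutive values from one column


@dataclass
class ColumnChunk:
    column: str
    pages: list = field(default_factory=list)


@dataclass
class RowGroup:
    num_rows: int
    column_chunks: list = field(default_factory=list)  # one per column


def chunk(seq, size):
    """Split seq into consecutive slices of at most `size` items."""
    return [seq[i:i + size] for i in range(0, len(seq), size)]


def build_row_groups(columns, rows_per_group, values_per_page):
    """Lay out columnar data as row groups -> column chunks -> pages.

    Simplification: counts are used instead of byte sizes, and no
    encoding or compression is applied to the pages.
    """
    num_rows = len(next(iter(columns.values())))
    groups = []
    for start in range(0, num_rows, rows_per_group):
        stop = min(start + rows_per_group, num_rows)
        group = RowGroup(num_rows=stop - start)
        for name, values in columns.items():
            pages = [Page(p) for p in chunk(values[start:stop], values_per_page)]
            group.column_chunks.append(ColumnChunk(name, pages))
        groups.append(group)
    return groups


columns = {"id": list(range(10)), "score": [i * 0.5 for i in range(10)]}
groups = build_row_groups(columns, rows_per_group=4, values_per_page=2)
for g in groups:
    print(g.num_rows, [(c.column, len(c.pages)) for c in g.column_chunks])
```

With 10 rows and 4 rows per group, the sketch produces row groups of 4, 4, and 2 rows. Every group contains a chunk for both `id` and `score`, which mirrors the rule that each row group covers every column.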

Here’s a summary of the Parquet file structure:

| Component | Description |
|---|---|
| Header | 4-byte magic number `PAR1` that identifies the file as Parquet |
| Row Group | Horizontal slice of the rows; holds one column chunk per column |
| Column Chunk | All data for one column within a row group |
| Page | Unit of encoding and compression within a column chunk |
| Footer | File metadata (schema, row group and column chunk locations, statistics), its 4-byte length, and a closing `PAR1` |
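Because a Parquet file ends with its metadata, then a 4-byte little-endian length, then the `PAR1` magic number, a reader can locate the footer by looking at the last 8 bytes. The Python sketch below does only that framing step. It returns the footer bytes still Thrift-encoded and does not decode them; a full library such as pyarrow (for example `pyarrow.parquet.read_metadata`) handles the decoding. The `read_footer_bytes` name and the toy byte string are illustrative assumptions.

```python
import struct

MAGIC = b"PAR1"


def read_footer_bytes(data: bytes) -> bytes:
    """Return the raw (still Thrift-encoded) footer metadata of a Parquet file.

    Layout assumed: PAR1 | data ... | metadata | 4-byte LE length | PAR1
    """
    if len(data) < 12 or data[:4] != MAGIC or data[-4:] != MAGIC:
        raise ValueError("not a Parquet file: missing PAR1 magic bytes")
    # The 4 bytes just before the trailing magic hold the metadata length.
    (footer_len,) = struct.unpack("<I", data[-8:-4])
    footer_start = len(data) - 8 - footer_len
    if footer_start < 4:
        raise ValueError("footer length points outside the file")
    return data[footer_start:len(data) - 8]


# A toy byte string with the same framing as a real file; the "column data"
# and "FOOTER" payloads are placeholders, not real Parquet content.
toy_file = MAGIC + b"column data" + b"FOOTER" + struct.pack("<I", 6) + MAGIC
print(read_footer_bytes(toy_file))  # b'FOOTER'

# For a real file (the path is a placeholder):
# with open("example.parquet", "rb") as f:
#     metadata_bytes = read_footer_bytes(f.read())
```

Reading the whole file into memory keeps the sketch short. Production readers instead seek to the end of the file and read only the tail, which is why Parquet places its metadata in a footer.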

Benefits of Parquet Files

Parquet files offer several benefits over traditional file formats, making them a popular choice for big data applications:

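- Efficient Storage: Storing each column's values together produces runs of similar data that compress well, so Parquet files are usually much smaller than the equivalent CSV or JSON.

- Compression: Parquet supports several compression codecs, such as Snappy, Gzip, LZ4, and Zstandard. The codec is recorded per column chunk, and smaller files mean less data to read from disk or over the network.

- Encoding: Before compression, Parquet applies lightweight encodings such as dictionary encoding and run-length encoding. These shrink repetitive columns further and are fast to decode.

- Schema Evolution: Parquet supports schema evolution. For example, newer files can add columns, and engines such as Apache Spark can merge the compatible schemas of old and new files when reading them together. Support for other changes, such as renaming or retyping columns, varies by engine.

- Query Performance: Readers can skip columns a query does not reference. They can also use the min/max statistics stored in the footer to skip entire row groups that cannot match a filter, a technique known as predicate pushdown. This makes Parquet well suited to analytical engines such as Apache Spark and Apache Hive.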
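Dictionary encoding and run-length encoding are easiest to understand on a small column. Parquet stores each distinct value once in a dictionary and writes per-row indices into it. Those indices are stored with a hybrid of run-length encoding and bit-packing. The Python sketch below shows only the core idea: it omits bit-packing and the on-disk byte layout, and the function names are hypothetical.

```python
def dictionary_encode(values):
    """Replace each value with an integer index into a list of distinct values."""
    dictionary, indices, positions = [], [], {}
    for v in values:
        if v not in positions:
            positions[v] = len(dictionary)
            dictionary.append(v)
        indices.append(positions[v])
    return dictionary, indices


def run_length_encode(values):
    """Collapse consecutive repeats into (value, run_length) pairs."""
    runs = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1] = (v, runs[-1][1] + 1)
        else:
            runs.append((v, 1))
    return runs


def run_length_decode(runs):
    """Expand (value, run_length) pairs back into the original sequence."""
    return [v for v, n in runs for _ in range(n)]


cities = ["Oslo", "Oslo", "Oslo", "Lima", "Lima", "Oslo"]
dictionary, indices = dictionary_encode(cities)
runs = run_length_encode(indices)

print(dictionary)  # ['Oslo', 'Lima']
print(indices)     # [0, 0, 0, 1, 1, 0]
print(runs)        # [(0, 3), (1, 2), (0, 1)]
```

Combining the two pays off on low-cardinality columns. The strings are stored once, and long stretches of identical indices shrink to a single run.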
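Predicate pushdown with row-group statistics can be sketched in a few lines. Assume the footer records a minimum and maximum value of one column for each row group. A reader can then rule out any row group whose range does not overlap the query's filter before reading any data pages. The `RowGroupStats` class, the `row_groups_to_scan` helper, and the statistics values are hypothetical illustrations, not a real library API.

```python
from dataclasses import dataclass


@dataclass
class RowGroupStats:
    """Min/max of one column in one row group, as a footer might record them."""
    row_group: int
    min_value: float
    max_value: float


def row_groups_to_scan(stats, lower, upper):
    """Return row groups whose [min, max] range overlaps the filter [lower, upper].

    Groups that do not overlap cannot contain matching rows and are skipped.
    """
    return [s.row_group for s in stats
            if s.max_value >= lower and s.min_value <= upper]


stats = [
    RowGroupStats(0, 0.0, 9.9),
    RowGroupStats(1, 10.0, 19.9),
    RowGroupStats(2, 20.0, 29.9),
]

# Filter "temp BETWEEN 12 AND 15": only row group 1 can contain matches.
print(row_groups_to_scan(stats, 12.0, 15.0))  # [1]
```

Statistics only prove where matches cannot be. A row group that passes the check must still be read and filtered row by row, and skipping works best when data is sorted or clustered on the filtered column.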

Practical Applications of Parquet Files

Parquet files are widely used in various big data applications, including:

- Data Warehousing: Parquet files are commonly used in data warehousing solutions, such as Apache Hive and Apache Impala, for efficient data storage and querying.

- Data Science: Parquet files are a popular choice for data scientists, as they provide fast access to large datasets and support complex data types.

- Machine Learning: Parquet is a common storage format for training data in machine learning pipelines, such as those built with Apache Spark MLlib, because it stores and loads large datasets efficiently.

- ETL (Extract, Transform, Load): Parquet files are often