Reading JSON Files with Apache Spark: A Detailed Guide

Are you looking to extract data from JSON files using Apache Spark? If so, you’ve come to the right place. In this article, I’ll walk you through reading JSON files into Spark DataFrames and extracting the data you need from them. Whether you’re a beginner or an experienced data scientist, this guide covers what you need to work with JSON files in Spark.

Understanding JSON Files

Before diving into Spark, it’s important to have a basic understanding of JSON files. JSON (JavaScript Object Notation) is a lightweight data-interchange format that is easy for humans to read and write and easy for machines to parse and generate. It is often used to transmit data between a server and a web application.

A JSON object is a set of key-value pairs enclosed in curly braces. Keys are strings, and values can be strings, numbers, booleans, null, arrays, or other objects. For example:

{ "name": "John Doe", "age": 30, "address": { "street": "123 Main St", "city": "Anytown", "state": "CA", "zip": "12345" }, "phone": "555-1234" }

This JSON object contains information about a person, including their name, age, address, and phone number. The address itself is another JSON object, containing its own set of key-value pairs.

Setting Up Apache Spark

Before you can start working with JSON files in Spark, you’ll need a working Apache Spark installation. If you haven’t already, download and install Apache Spark from the official website (https://spark.apache.org/downloads.html). Spark runs on the JVM, so a compatible Java installation is also required. JSON support is built into Spark SQL, so no additional libraries are needed to read JSON files.

Open a terminal or command prompt and navigate to the directory where you’ve installed Spark. Then, run the following command to start the Spark shell:

./bin/spark-shell

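This will start the Spark shell, an interactive Scala prompt where you can run Spark code. The shell creates a SparkSession for you and exposes it as `spark`, which the examples in this guide rely on.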

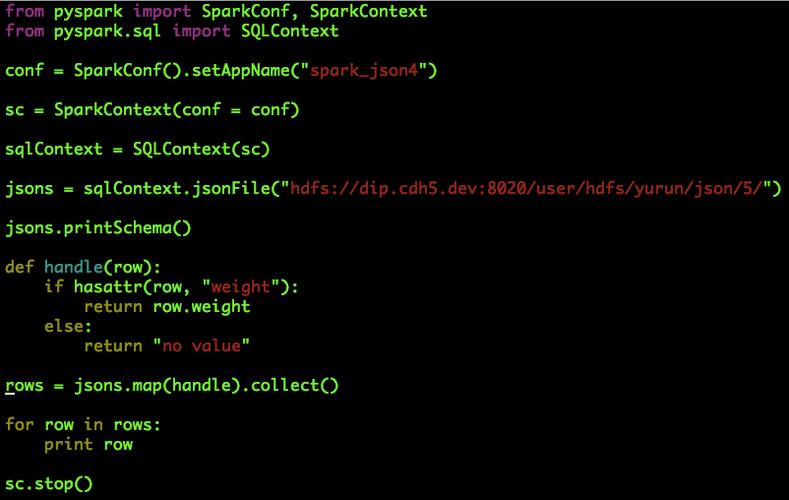
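If you would rather run the examples from a standalone script or notebook than from the shell, you need to obtain the session yourself. The following is a minimal sketch, assuming a local single-machine setup; the application name is a placeholder chosen for illustration. Inside the Spark shell, `getOrCreate()` simply returns the session that already exists.

```scala
import org.apache.spark.sql.SparkSession

// Assumption: local mode, for experimentation on one machine.
// "json-guide" is a hypothetical application name.
val spark = SparkSession.builder()
  .appName("json-guide")
  .master("local[*]") // use all available local cores
  .getOrCreate()      // reuses the existing session if there is one

// Print the Spark version to confirm the session is usable
println(spark.version)
```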

Reading JSON Files with Spark

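Now that you have Spark set up, you can start reading JSON files. Spark provides a convenient method, `spark.read.json()`, to read JSON files directly into a DataFrame. The method parses the file, infers a schema from the data, and returns a structured result that can be easily manipulated and analyzed. By default it expects the JSON Lines layout, where each line of the file is one complete JSON object. A file that holds a single object or array spread across several lines must be read with the `multiLine` option set to `true`.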

Here’s an example of how to read a JSON file using Spark:

val jsonFilePath = "path/to/your/json/file.json"
val jsonData = spark.read.json(jsonFilePath)

In this example, `jsonFilePath` is the path to your JSON file. The `read.json()` method reads the file and returns a DataFrame called `jsonData`. You can now perform various operations on this DataFrame, such as filtering, aggregating, and joining data.
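To make the input layout concrete, here is a small sketch that writes two sample files to a temporary directory and reads each one back. The file names and records are made up for illustration. The first file holds one object per line; the second holds a single pretty-printed object and is read with the `multiLine` option.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.Files
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder()
  .appName("read-json-sketch") // hypothetical name
  .master("local[*]")
  .getOrCreate()

// Hypothetical sample files in a throwaway directory
val dir = Files.createTempDirectory("json-sketch")

// One complete JSON object per line
val linesPath = dir.resolve("people.jsonl")
Files.write(linesPath,
  """{"name": "John Doe", "age": 30}
    |{"name": "Jane Roe", "age": 25}""".stripMargin.getBytes(StandardCharsets.UTF_8))

// A single object spread across several lines
val prettyPath = dir.resolve("person.json")
Files.write(prettyPath,
  """{
    |  "name": "John Doe",
    |  "age": 30
    |}""".stripMargin.getBytes(StandardCharsets.UTF_8))

// toUri gives a file:// URI so the path resolves on the local filesystem
val people = spark.read.json(linesPath.toUri.toString)
val person = spark.read
  .option("multiLine", "true")
  .json(prettyPath.toUri.toString)

people.printSchema() // shows the inferred column names and types
person.show()
```

Reading the pretty-printed file without `multiLine` would not fail outright. In the default permissive mode, Spark places lines it cannot parse in a `_corrupt_record` column, which is a common sign that the option is missing.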

Extracting Data from JSON Files

Once you have a DataFrame containing your JSON data, you can easily extract specific information from it. Spark provides a variety of functions and methods to help you manipulate and extract data from your DataFrame.

For example, let’s say you want to extract the name and age of each person in your JSON file. You can use the `select()` method to select the desired columns:

val extractedData = jsonData.select("name", "age")

This will create a new DataFrame called `extractedData` containing only the name and age columns. You can then perform further operations on this DataFrame, such as filtering or sorting the data.
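As a sketch of how selection combines with filtering and sorting, the example below builds a small DataFrame from in-memory JSON strings and keeps only people aged 30 or older, oldest first. The records and the age threshold are invented for illustration.

```scala
import org.apache.spark.sql.{Encoders, SparkSession}
import org.apache.spark.sql.functions.col

val spark = SparkSession.builder()
  .appName("select-sketch") // hypothetical name
  .master("local[*]")
  .getOrCreate()

// Hypothetical records, one JSON object per string
val records = Seq(
  """{"name": "John Doe", "age": 30, "phone": "555-1234"}""",
  """{"name": "Jane Roe", "age": 25, "phone": "555-5678"}""",
  """{"name": "Sam Poe", "age": 41, "phone": "555-9012"}"""
)

// spark.read.json also accepts a Dataset[String] instead of a file path
val jsonData = spark.read.json(spark.createDataset(records)(Encoders.STRING))

val adults = jsonData
  .select("name", "age")      // keep only the two columns of interest
  .filter(col("age") >= 30)   // drop rows below the threshold
  .orderBy(col("age").desc)   // oldest first

adults.show()
```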

Handling Nested JSON Data

JSON files can contain nested structures, such as the address object in our previous example. When Spark reads such a file, each nested object becomes a struct column, and its fields can be selected with dot notation, such as `address.city`. The `from_json()` function serves a different case: it parses a column that holds JSON as a plain string into a struct, using a schema you supply.
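The following sketch shows `from_json()` applied to a string column. It assumes a hypothetical DataFrame with a single column, `addressJson`, whose values are JSON text; the column name and schema are chosen for illustration only.

```scala
import org.apache.spark.sql.{Encoders, SparkSession}
import org.apache.spark.sql.functions.{col, from_json}
import org.apache.spark.sql.types.{StringType, StructType}

val spark = SparkSession.builder()
  .appName("from-json-sketch") // hypothetical name
  .master("local[*]")
  .getOrCreate()

// Hypothetical input: the address arrives as a JSON string, not a struct
val raw = spark.createDataset(Seq(
  """{"street": "123 Main St", "city": "Anytown", "state": "CA", "zip": "12345"}"""
))(Encoders.STRING).toDF("addressJson")

// from_json needs an explicit schema describing the string's contents
val addressSchema = new StructType()
  .add("street", StringType)
  .add("city", StringType)
  .add("state", StringType)
  .add("zip", StringType)

val parsed = raw
  .withColumn("address", from_json(col("addressJson"), addressSchema))
  .select("address.street", "address.city", "address.state", "address.zip")

parsed.show(truncate = false)
```

Once the string has been parsed, the resulting struct column behaves like one produced by `spark.read.json()`, so the same dot notation applies.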

Here’s an example of how to extract data from a nested JSON object:

val nestedData = jsonData.select(
  "name",
  "age",
  "address.street",
  "address.city",
  "address.state",
  "address.zip"
)

In this example, we’re extracting the street, city, state, and zip