Square Online robots.txt File: A Comprehensive Guide

Understanding the robots.txt file is crucial for anyone managing a website on Square Online. This file plays a pivotal role in dictating how search engines crawl and index your site. In this detailed guide, we will delve into the intricacies of the Square Online robots.txt file, covering its purpose, structure, and best practices.

What is a robots.txt file?

The robots.txt file is a plain text file that sits in your website’s root directory. It serves as a set of instructions for web crawlers, telling them which parts of your site they may and may not access. By creating and maintaining a robots.txt file, you can influence how search engines like Google, Bing, and Yahoo crawl your website.
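Because crawlers look for the file at the root of the site, its address can be derived from any page URL. The following is a minimal Python sketch of that idea; the function name `robots_url` and the `example.com` address are placeholders chosen for illustration, not anything specific to Square Online.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url):
    """Return the robots.txt URL for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep only the scheme and host; the file always sits at the root path.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://example.com/shop/item?id=3"))
# prints https://example.com/robots.txt
```

Opening the resulting address in a browser is a quick way to see the rules a site currently serves.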

Why is the robots.txt file important for Square Online?

Square Online is a popular e-commerce platform that allows businesses to create and manage their online stores. With a well-chosen set of robots.txt rules, you can improve your website’s search engine visibility, improve user experience, and keep crawlers away from pages that are not meant to appear in search results. Here are some key reasons why the robots.txt file is important for Square Online:

- Control search engine crawling: Keep search engines away from pages that are not ready for public viewing, such as pages under construction. The file itself is publicly readable, so it should not be the only safeguard for sensitive information.
- Improve website performance: Exclude large, resource-intensive files from crawling, which reduces the load that crawlers place on your site.
- Enhance user experience: Steer search engines toward the most relevant pages on your site, so that users find the information they are looking for.

Structure of a robots.txt file

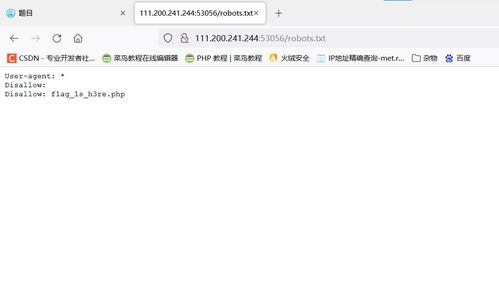

A typical robots.txt file consists of two main components: user-agent lines and disallow lines. Here’s a breakdown of each component:

User-agent lines

User-agent lines specify which web crawlers the instructions in the file apply to. For example, you can create a separate set of instructions for Googlebot, Bingbot, and other search engine crawlers. Here’s an example of a user-agent line:

User-agent: Googlebot

Disallow lines

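Disallow lines specify which parts of your website should not be accessed by the specified user-agent. Major crawlers such as Googlebot and Bingbot also support wildcards, so one rule can cover multiple pages: an asterisk (*) matches any sequence of characters, and a dollar sign ($) marks the end of a URL. Here’s an example of a disallow line: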

Disallow: /admin/

This line tells the Googlebot crawler not to access any pages within the “/admin/” directory.
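To see how a user-agent line and a disallow line work together, the two example lines can be fed to the robots.txt parser in Python's standard library. This is a small sketch for illustration: the helper `is_allowed` and the `example.com` URLs are made up here, and real crawlers may interpret edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# The two example lines from this section, combined into one rule group.
RULES = """\
User-agent: Googlebot
Disallow: /admin/
"""


def is_allowed(rules, agent, url):
    """Return True if `agent` may fetch `url` under the given rules text."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(agent, url)


# Googlebot is blocked from /admin/ but not from the rest of the site.
print(is_allowed(RULES, "Googlebot", "https://example.com/admin/settings"))  # False
print(is_allowed(RULES, "Googlebot", "https://example.com/shop"))            # True
# The group names only Googlebot, so other crawlers are unaffected.
print(is_allowed(RULES, "Bingbot", "https://example.com/admin/settings"))    # True
```

The last call shows why the user-agent line matters: a rule group only applies to the crawlers it names.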

Best practices for creating a robots.txt file on Square Online

Creating an effective robots.txt file requires careful consideration of your website’s structure and content. Here are some best practices to keep in mind:

- Start with a “User-agent: *” line: The asterisk makes the rules that follow apply to all web crawlers.
- Use wildcards sparingly: Wildcards can be powerful, but they can also lead to unintended consequences. Use them only when necessary.
- Keep the file simple and easy to read: Avoid overly complex rules that can confuse search engines.
- Test your robots.txt file: Use a tool such as Google Search Console to test your robots.txt file and ensure it’s working as intended.
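The advice about wildcards and testing can be tried out locally before a rule goes live. The sketch below is a simplified matcher written for this guide, not an official tool: it assumes the common convention that `*` matches any run of characters and a trailing `$` marks the end of the URL, and it ignores details such as Allow rules and percent-encoding. The rules and paths in `checks` are hypothetical.

```python
import re


def rule_to_regex(rule_path):
    """Translate a robots.txt path pattern into a compiled regex.

    Simplified: handles '*' and a trailing '$' only.
    """
    anchored = rule_path.endswith("$")
    body = rule_path[:-1] if anchored else rule_path
    # Escape the literal pieces and join them with '.*' where '*' stood.
    pattern = ".*".join(re.escape(piece) for piece in body.split("*"))
    return re.compile("^" + pattern + ("$" if anchored else ""))


def rule_matches(rule_path, url_path):
    """Return True if url_path falls under the given rule pattern."""
    return rule_to_regex(rule_path).match(url_path) is not None


# Hypothetical rule/path pairs to check before publishing a rule.
checks = [
    ("/*.json$", "/collections/summer.json"),  # intended match
    ("/*.json$", "/collections/summer"),       # the page itself is unaffected
    ("/*search", "/search/"),                  # intended match
    ("/*search", "/research-notes"),           # unintended match
]
for rule, path in checks:
    print(f"{rule!r} vs {path!r}: {rule_matches(rule, path)}")
```

The last pair illustrates the risk: a rule meant for the search page also catches an unrelated page whose path happens to contain the word "search".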

Example of a robots.txt file for Square Online

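Here’s an example of a robots.txt file for a Square Online website. The paths are illustrative, so check each one against your own site’s URLs before using it; a rule such as Disallow: /products/ keeps crawlers away from every product page.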

User-agent: *
Disallow: /admin/
Disallow: /checkout/
Disallow: /cart/
Disallow: /orders/
Disallow: /account/
Disallow: /password/
Disallow: /login/
Disallow: /register/
Disallow: /logout/
Disallow: /wishlist/
Disallow: /search/
Disallow: /tags/
Disallow: /collections/
Disallow: /products/
Disallow: /collections//.json
Disallow: /collections///.json
Disallow: /collections////.json
Disallow: /collections/////.json
Disallow: /collections//////.json
Disallow: /collections///////.json
Disallow: /collections////////.json
Disallow: /collections/////////.json
Disallow: /collections//////////.json
Disallow: /collections///////////.json
Disallow: /collections////////////.json
Disallow:
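A robots.txt file expects one directive per line, with the user-agent line first and its disallow lines beneath it. As a rough sketch of that layout, the Python helper below builds the text from a list of paths. The name `build_robots_txt` and the three sample paths are assumptions for illustration, and how the resulting file gets published depends on what your platform allows, which this sketch does not cover.

```python
def build_robots_txt(disallow_paths, user_agent="*"):
    """Build robots.txt text with one rule group for `user_agent`."""
    lines = [f"User-agent: {user_agent}"]
    for path in disallow_paths:
        # Rule paths are matched against URL paths, which begin with '/'.
        if not path.startswith("/"):
            raise ValueError(f"path must start with '/': {path!r}")
        lines.append(f"Disallow: {path}")
    # One directive per line, ending with a newline.
    return "\n".join(lines) + "\n"


print(build_robots_txt(["/admin/", "/checkout/", "/cart/"]))
```

Generating the file from a short list keeps the rules easy to review, in line with the advice to keep the file simple.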