Using PySpark to Validate JSON File Structure When Changes Occur

As data grows and evolves, keeping your JSON files consistent becomes increasingly important, and one common challenge is a change in the file structure. This article walks through using PySpark to detect such changes by validating JSON files against an expected schema, so you can apply the same checks in your own data workflows.

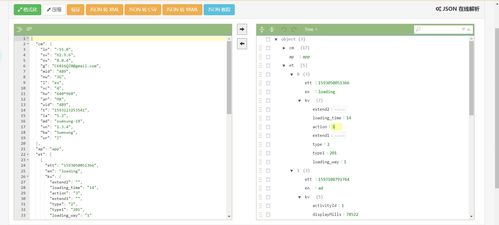

Understanding JSON Structure Changes

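Before diving into the technical details, it helps to define what counts as a change in JSON structure: fields being added or removed, or a field's data type changing (for example, `age` arriving as a string instead of a number). The order of fields can also change, although JSON treats object members as unordered and Spark's schema inference lists fields alphabetically, so a reordering on its own is usually harmless. Recognizing these changes is the first step in validating your JSON files.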
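To make these categories concrete, here is a small standard-library sketch (plain Python, not a PySpark or library API) that compares the top-level fields of two JSON records and reports added, removed, and type-changed fields. The helper name `describe_changes` and the sample records are hypothetical. Because JSON objects are parsed into dictionaries, a change in key order alone produces no difference.

```python
import json


def describe_changes(old_json: str, new_json: str) -> dict:
    """Compare the top-level fields of two JSON objects (hypothetical helper)."""
    old, new = json.loads(old_json), json.loads(new_json)
    # Set operations on dict keys ignore key order, so reordering is not reported.
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    # For keys present in both records, compare the Python types of the values.
    type_changed = sorted(
        key for key in old.keys() & new.keys()
        if type(old[key]) is not type(new[key])
    )
    return {"added": added, "removed": removed, "type_changed": type_changed}


before = '{"name": "John Doe", "age": 30, "email": "john.doe@example.com"}'
after = '{"age": "30", "name": "John Doe", "phone": "555-0100"}'
print(describe_changes(before, after))
```

Here `phone` is reported as added, `email` as removed, and `age` as type-changed (number to string), while the reordering of `name` and `age` is ignored.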

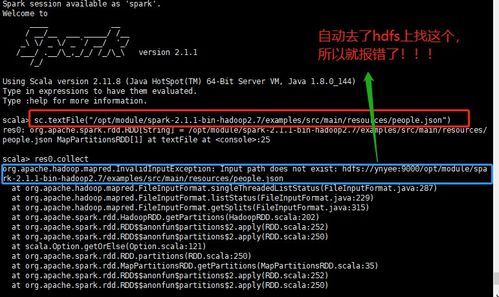

Setting Up Your PySpark Environment

Before you can validate JSON files, you need a working PySpark environment. Install PySpark with `pip install pyspark`, or download Apache Spark from the official Apache Spark website and follow the installation instructions for your operating system. Spark also needs a supported Java runtime.

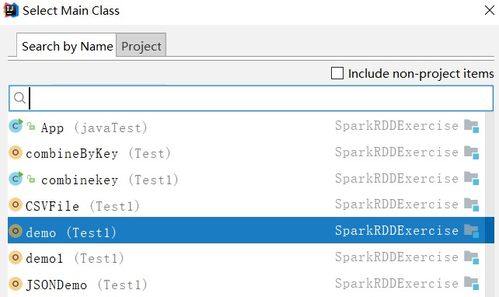

Once installed, you can start a PySpark session by running the following code:

```python
from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("JSONValidation").getOrCreate()
```

Reading and Parsing JSON Files

With your PySpark environment set up, the next step is to read and parse your JSON files. PySpark's `spark.read.json()` reads JSON files directly into a DataFrame and, unless you supply a schema, infers one by scanning the data. The inferred schema, available as `df.schema`, is what you compare against the expected structure during validation.

Here’s an example of how to read a JSON file:

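```python
# spark.read.json expects JSON Lines (one object per line) by default;
# pass multiLine=True for a pretty-printed file or a multi-line top-level array.
df = spark.read.json("path_to_your_json_file.json")
```

Defining the Expected Schema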

Once your JSON file is loaded into a DataFrame, define the expected schema. It should describe the structure your JSON files are supposed to have. Schemas are built from the classes in `pyspark.sql.types`, such as `StructType`, `StructField`, and the individual field data types.

For example, if your JSON file has the following structure:

```json
[
  {"name": "John Doe", "age": 30, "email": "john.doe@example.com"},
  {"name": "Jane Smith", "age": 25, "email": "jane.smith@example.com"}
]
```

You can define the schema as follows:

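```python
from pyspark.sql.types import StructType, StructField, StringType, LongType

# Spark infers whole JSON numbers as LongType, so declare "age" as LongType
# to match the schema that spark.read.json produces.
schema = StructType([
    StructField("name", StringType(), True),
    StructField("age", LongType(), True),
    StructField("email", StringType(), True),
])
```

Validating the JSON File Structure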

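Now that you have both the DataFrame and the expected schema, you can validate the structure of the JSON file. PySpark does not provide a `validateSchema()` method for this. Instead, compare the schema Spark inferred (`df.schema`) with the expected one. Because inference lists fields alphabetically and types whole numbers as `LongType`, compare field names and types without regard to order (and declare integer fields as `LongType`) rather than testing `df.schema == schema` directly.

Here’s an example of how to validate the JSON file structure:

```python
# Compare name -> type maps, which ignores field order
# (schema inference sorts fields alphabetically).
actual = {f.name: f.dataType for f in df.schema.fields}
expected = {f.name: f.dataType for f in schema.fields}
if actual != expected:
    raise ValueError(f"Schema mismatch: expected {schema.simpleString()}, got {df.schema.simpleString()}")
```

This raises a `ValueError` if the structure of the JSON file does not match the expected schema. You can catch this exception and handle it accordingly.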

Handling Schema Changes

As your data evolves, your JSON file structure is likely to change. When a change is intentional, update your expected schema and re-run the validation so it keeps matching the current structure of your JSON files. When it is not, the failed validation flags the file for investigation.

Conclusion

Validating JSON file structure is an essential part of maintaining data integrity and consistency. With PySpark you can read JSON files, inspect the schema Spark infers, and compare it against an expected schema, so structural changes are detected early instead of passing silently into your data workflows.

| Step | Description |
|---|---|
| 1 | Set up your PySpark environment. |