Calculating MD5 Checksum on a File with PySpark: A Detailed Guide

Verifying file integrity matters whenever data is copied, downloaded, or moved between storage systems. One of the most common ways to do this is to calculate an MD5 checksum. In a distributed computing environment such as Apache Spark, the task can be handled with PySpark. This article walks through calculating MD5 checksums on files with PySpark, from setting up a session to hashing the file contents.

Understanding MD5 Checksum

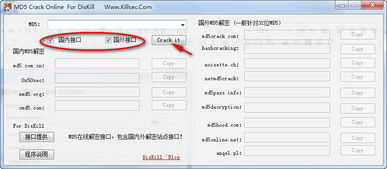

Before diving into the PySpark implementation, it’s essential to understand what an MD5 checksum is. MD5 (Message-Digest Algorithm 5) is a widely used hash function that produces a 128-bit (16-byte) hash value from an input (or “message”). The hash value is usually written as a 32-character hexadecimal string. The primary purpose of an MD5 checksum is to verify the integrity of a file by comparing the checksum of the original file with the checksum of the copy after it has been downloaded or transferred.
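As a quick illustration of these properties, the following minimal sketch uses Python’s standard `hashlib` module on an arbitrary sample message (chosen here only for demonstration) to show the 16-byte digest and its 32-character hexadecimal form.

```python
import hashlib

# Arbitrary sample message, chosen only for illustration.
message = b"hello world"

md5_hash = hashlib.md5(message)

# The raw digest is 16 bytes (128 bits).
raw = md5_hash.digest()
print(len(raw))      # 16

# The usual textual form is 32 hexadecimal characters.
hex_form = md5_hash.hexdigest()
print(hex_form)      # 5eb63bbbe01eeed093cb22bb8f5acdc3
```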

MD5 is commonly used to check the integrity of files after a download, that is, to detect whether the file was accidentally corrupted during the transfer. However, MD5 is not considered secure for cryptographic purposes: known vulnerabilities allow an attacker to create collisions (two different inputs producing the same hash value), so a matching MD5 checksum does not rule out deliberate tampering.
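The comparison described above can be sketched in plain Python before bringing Spark into the picture. The helper names `file_md5` and `matches_published_checksum` are illustrative, and the 1 MiB chunk size is an arbitrary choice; reading in chunks keeps memory use flat for large files.

```python
import hashlib


def file_md5(path, chunk_size=1024 * 1024):
    """Return the hexadecimal MD5 of the file at `path`, reading it in chunks."""
    md5_hash = hashlib.md5()
    with open(path, "rb") as handle:
        # iter() with a sentinel stops when read() returns an empty bytes object.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            md5_hash.update(chunk)
    return md5_hash.hexdigest()


def matches_published_checksum(path, expected_hex):
    """Compare a file's MD5 with a published value, ignoring case and whitespace."""
    return file_md5(path) == expected_hex.strip().lower()
```

A mismatch means the local copy differs from the file the checksum was computed for; a match only indicates that accidental corruption is very unlikely.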

Setting Up PySpark Environment

Before you can start calculating MD5 checksums on files using PySpark, you need to set up your PySpark environment. Here’s a step-by-step guide to help you get started:

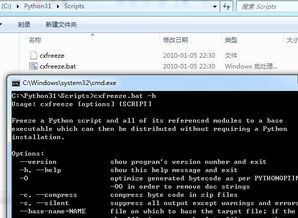

- Install PySpark: You can install PySpark with `pip install pyspark`, or by downloading Apache Spark from the official website and following the installation instructions for your operating system.

- Set up a Spark session: Once PySpark is installed, you can set up a Spark session by importing the necessary modules and creating a session object. Here’s an example:

```python
from pyspark.sql import SparkSession

spark = (
    SparkSession.builder
    .appName("MD5 Checksum Calculator")
    .getOrCreate()
)
```

Now that you have a Spark session, you can proceed to calculate the MD5 checksum of your files.

Reading Files into PySpark

Before calculating the MD5 checksum, you need to read the file into PySpark. You can do this through the Spark session’s `read` attribute, a `DataFrameReader` that can load files from data sources such as HDFS, the local file system, and cloud storage. Here’s an example of reading a file from the local file system:

```python
df = spark.read.text("path/to/your/file.txt")
```

In this example, `path/to/your/file.txt` is the path to the file you want to read. The `read.text` method reads the file into a DataFrame with a single string column named `value`, where each row holds one line of the file.
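One consequence of line-based reading is worth seeing concretely: the rows do not keep the line terminators. The sketch below does not use Spark. It approximates the line split with Python’s `str.splitlines` on two made-up byte strings, which is an assumption about how the rows would look rather than a reproduction of Spark’s reader.

```python
import hashlib

# Two hypothetical files with the same text but different line endings.
unix_bytes = b"alpha\nbeta\n"
windows_bytes = b"alpha\r\nbeta\r\n"

# Approximate the row-per-line view: the terminators are dropped.
unix_rows = unix_bytes.decode("utf-8").splitlines()
windows_rows = windows_bytes.decode("utf-8").splitlines()

print(unix_rows)                    # ['alpha', 'beta']
print(unix_rows == windows_rows)    # True

# The files themselves differ, so their MD5 checksums differ too.
unix_md5 = hashlib.md5(unix_bytes).hexdigest()
windows_md5 = hashlib.md5(windows_bytes).hexdigest()
print(unix_md5 == windows_md5)      # False
```

Two files that differ byte for byte can therefore produce identical rows, which matters when the rows are later used as input to a hash.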

Calculating MD5 Checksum with PySpark

Now that you have the file content in a DataFrame, you can calculate an MD5 checksum for each line using Python’s `hashlib` module. Here’s an example:

```python
import hashlib


def calculate_md5_checksum(line):
    """Return the hexadecimal MD5 digest of one line of text."""
    md5_hash = hashlib.md5()
    md5_hash.update(line.encode("utf-8"))
    return md5_hash.hexdigest()


# Each RDD element is a Row; the line text is in its `value` field.
line_checksums = df.rdd.map(lambda row: calculate_md5_checksum(row.value)).collect()
```

In this example, the `calculate_md5_checksum` function takes a line of text as input, calculates its MD5 checksum with `hashlib`, and returns the hexadecimal representation. The `rdd.map` call applies the function to the `value` field of each row, and `collect` gathers the results into a list on the driver. Note that the result is one checksum per line, not a checksum of the file as a whole. To obtain a single checksum that matches what tools such as `md5sum` report, the hash has to be computed over the file’s raw bytes rather than over its individual lines.
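If the goal is one checksum per file, one option is to hash each file’s raw bytes instead of its lines. The following is a minimal sketch, assuming an existing `SparkSession` is passed in as `spark`. It relies on `SparkContext.binaryFiles`, which yields `(path, bytes)` pairs; the helper names `md5_hex` and `file_checksums` are illustrative. Each file is loaded whole into memory on a single executor, so this approach suits many small or medium-sized files rather than one very large file.

```python
import hashlib


def md5_hex(data):
    """Return the hexadecimal MD5 digest of a bytes object."""
    return hashlib.md5(data).hexdigest()


def file_checksums(spark, path):
    """Return {file_path: md5_hex} for every file matched by `path`.

    `spark` is assumed to be an existing SparkSession; `path` may be a
    single file, a directory, or a glob pattern.
    """
    # binaryFiles yields one (file path, file content as bytes) pair per file.
    pairs = spark.sparkContext.binaryFiles(path)
    # Hash each file's bytes on the executors, then bring the results to the driver.
    return dict(pairs.mapValues(md5_hex).collect())
```

Calling `file_checksums(spark, "path/to/your/file.txt")` would return a dictionary with one entry, keyed by the fully qualified path that Spark reports for the file.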

Here’s a table summarizing the steps involved in calculating the MD5 checksum using PySpark:

| Step | Description |
|---|---|
| 1 | Set up a Spark session |
| 2 | Read the file into a DataFrame |
| 3 | Define a function to calculate the MD5 checksum |