Using urllib.request to Download Files to Memory: A Detailed Guide

Have you ever wanted to download a file directly into memory without saving it to disk? If so, you’re in luck! Python’s standard-library module urllib.request provides a convenient way to do this. In this article, I’ll walk you through downloading files to memory with urllib.request, covering file handling, error handling, and performance optimization.

Understanding urllib.request

Before diving into the details, let’s briefly discuss what urllib.request is. urllib is a package in Python’s standard library that groups several modules for working with URLs. Its request module, specifically, lets you open URLs (most commonly over HTTP and HTTPS) and read the responses. It’s a handy tool for web scraping, data retrieval, and more.
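To see what `urlopen()` hands back without touching the network, here is a minimal sketch that uses a `data:` URL, which urllib.request understands out of the box. The URL and its payload are illustrative only. With a real http(s) URL the response is an `http.client.HTTPResponse`, which additionally exposes details such as `status`.

```python
import urllib.request

# A data: URL embeds its payload in the URL itself ("Hello, World!" in base64),
# so this example runs offline. The payload is purely illustrative.
url = 'data:text/plain;base64,SGVsbG8sIFdvcmxkIQ=='

with urllib.request.urlopen(url) as response:
    content_type = response.info().get_content_type()  # parsed response headers
    body = response.read()                              # the payload as bytes

print(content_type)  # text/plain
print(body)          # b'Hello, World!'
```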

Setting Up Your Environment

Before you start, make sure you have Python 3 installed on your system. You can download and install it from the official website (https://www.python.org/). Because urllib.request ships with Python’s standard library, there is nothing extra to install. Once Python is set up, you can proceed with the following steps:

- Open your favorite text editor or IDE.

- Import the necessary modules:

```python
import io
import urllib.error
import urllib.request
```

Downloading a File to Memory

Now that you have the required modules, let’s see how to download a file to memory. The process is quite straightforward. Here’s a step-by-step guide:

- Obtain the URL of the file you want to download.

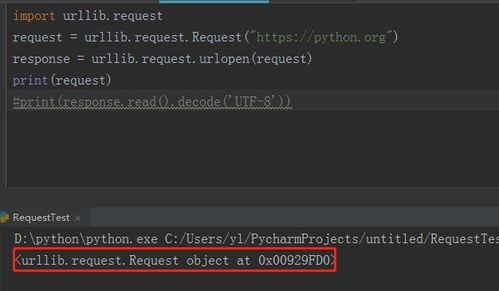

- Use the `urllib.request.urlopen()` function to open the URL and retrieve the response object.

- Call the response object’s `read()` method to obtain the file content as a `bytes` object.

- Keep the file content in a variable (it is already in memory), or write it to a file if you also need a copy on disk.
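The steps above can be wrapped in a small helper. The sketch below is one way to do it, not part of urllib itself: `download_to_memory` is a hypothetical name, and its 30-second default timeout is an arbitrary choice. Wrapping the bytes in `io.BytesIO` gives you a file-like object that other libraries, such as `zipfile`, can read without any file on disk. A `data:` URL carrying a tiny ZIP archive stands in for a real download so the example runs offline.

```python
import base64
import io
import urllib.request
import zipfile


def download_to_memory(url, timeout=30):
    """Hypothetical helper: fetch `url` and return its body as an in-memory file."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return io.BytesIO(response.read())


# Build a tiny ZIP archive in memory and expose it as a data: URL,
# standing in for something like 'https://example.com/file.zip'.
archive = io.BytesIO()
with zipfile.ZipFile(archive, 'w') as zf:
    zf.writestr('hello.txt', 'Hello from inside the ZIP')
url = 'data:application/zip;base64,' + base64.b64encode(archive.getvalue()).decode('ascii')

# Open the downloaded archive straight from memory; nothing touches the disk.
in_memory_file = download_to_memory(url)
with zipfile.ZipFile(in_memory_file) as zf:
    names = zf.namelist()
    text = zf.read('hello.txt').decode('utf-8')

print(names)  # ['hello.txt']
print(text)   # Hello from inside the ZIP
```

The same pattern works for any format whose reader accepts a file object, so the download never needs a temporary file.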

Here’s an example code snippet that demonstrates the process:

```python
import urllib.request

url = 'https://example.com/file.zip'

# Open the URL; the with-block closes the connection when done
with urllib.request.urlopen(url) as response:
    # Read the whole body into memory as a bytes object
    file_content = response.read()
```

You can also write the content to a file:

```python
with open('file.zip', 'wb') as f:
    f.write(file_content)
```

Error Handling

When downloading files from the internet, it’s essential to handle the errors that may occur. The exceptions raised by urllib.request are defined in the urllib.error module. Here are the most common ones and how to handle them:

- HTTPError: This error occurs when the server returns an HTTP error code (e.g., 404, 500). You can catch this error using a try-except block and handle it accordingly.

- URLError: This error occurs when no response is received at all, for example because of a network problem (such as a DNS failure or a refused connection) or an invalid or unsupported URL. As with HTTPError, you can catch it in a try-except block.
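Because `HTTPError` is a subclass of `URLError`, the order of the `except` clauses matters: catch `HTTPError` first, or the more general handler will swallow it. The sketch below makes the hierarchy visible and turns both failures into a short status message. `describe_fetch` is a hypothetical helper name, and the `unknown://` URL is simply a convenient way to trigger a `URLError` offline.

```python
import urllib.error
import urllib.request

# HTTPError inherits from URLError, so it must be caught first.
print(issubclass(urllib.error.HTTPError, urllib.error.URLError))  # True


def describe_fetch(url, timeout=10):
    """Hypothetical helper: return a short description of what happened."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return f'OK: {len(response.read())} bytes'
    except urllib.error.HTTPError as e:
        # The server answered, but with an error status such as 404 or 500.
        return f'HTTP Error: {e.code} - {e.reason}'
    except urllib.error.URLError as e:
        # No usable response: unsupported scheme, DNS failure, refused connection, ...
        return f'URL Error: {e.reason}'


print(describe_fetch('data:,hello'))        # OK: 5 bytes
print(describe_fetch('unknown://example'))  # URL Error: unknown url type: unknown
```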

Here’s an example code snippet that demonstrates error handling:

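```python
import urllib.error
import urllib.request

url = 'https://example.com/file.zip'

try:
    with urllib.request.urlopen(url) as response:
        file_content = response.read()
except urllib.error.HTTPError as e:
    # The server responded with an error status code (e.g. 404, 500)
    print(f'HTTP Error: {e.code} - {e.reason}')
except urllib.error.URLError as e:
    # No valid response was received (network problem, bad URL, ...)
    print(f'URL Error: {e.reason}')
```

Performance Optimization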

When downloading large files, it’s essential to optimize the process to minimize memory usage and improve performance. Here are some tips to help you achieve this:

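- Use a streaming approach: Instead of reading the entire file content at once, read it in chunks. This lets you process the file as it’s being downloaded, and it lowers peak memory usage as long as you handle each chunk and then discard it (for example, by writing it to disk); if you keep every chunk, the whole file still ends up in memory.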

- Use a timeout: Pass a `timeout` value (in seconds) to `urlopen()` so that a slow or unresponsive server can’t leave your program waiting indefinitely.
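As a minimal sketch of processing a download as it arrives, the function below computes a SHA-256 digest chunk by chunk, so only one chunk needs to be held at a time, and passes a `timeout` to `urlopen()`. The helper name `sha256_of_url`, the 64 KiB chunk size, and the 10-second timeout are illustrative assumptions; a `data:` URL again stands in for a real file so the example runs offline.

```python
import hashlib
import urllib.parse
import urllib.request


def sha256_of_url(url, chunk_size=64 * 1024, timeout=10):
    """Hypothetical helper: hash a download chunk by chunk without buffering it."""
    digest = hashlib.sha256()
    with urllib.request.urlopen(url, timeout=timeout) as response:
        # iter() with a b'' sentinel keeps calling read() until the stream is exhausted.
        for chunk in iter(lambda: response.read(chunk_size), b''):
            digest.update(chunk)  # the chunk can be discarded after this
    return digest.hexdigest()


payload = b'x' * 200_000  # larger than one chunk, so several reads happen
url = 'data:application/octet-stream,' + urllib.parse.quote_from_bytes(payload)

print(sha256_of_url(url) == hashlib.sha256(payload).hexdigest())  # True
```

Swapping `digest.update(chunk)` for a write to an open file, or for any other per-chunk processing, keeps the same bounded memory profile.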

Here’s an example code snippet that demonstrates a streaming approach:

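```python
import io
import urllib.request

url = 'https://example.com/file.zip'
chunk_size = 1024

# Collect chunks in a BytesIO buffer; repeated bytes concatenation can copy the data on every step
buffer = io.BytesIO()
with urllib.request.urlopen(url) as response:
    # Read the file in chunks until read() returns an empty bytes object
    while True:
        chunk = response.read(chunk_size)
        if not chunk:
            break
        buffer.write(chunk)  # or process each chunk here if you don't need the whole file

file_content = buffer.getvalue()
# Process the file content as needed
```

Conclusion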

Downloading files to memory using urllib.request is a straightforward process. By following the steps outlined in this article