Remove Duplicates from Known Hosts File: A Comprehensive Guide

Managing your known hosts file is an essential task for any system administrator or power user. In this guide, the known hosts file means the hosts file, located at /etc/hosts on Unix-like systems, which maps hostnames to IP addresses. It is not the same as SSH’s ~/.ssh/known_hosts, which stores the public keys of servers you have connected to. Over time, the hosts file can accumulate duplicate entries. These make the file harder to audit and can leave a hostname resolving to an address you did not expect. In this guide, I’ll walk you through identifying and removing those duplicates and keeping them from coming back.

Understanding the Known Hosts File

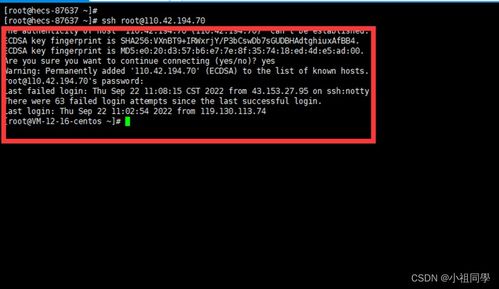

The known hosts file is consulted by the system’s hostname resolver, usually before DNS. This lets SSH and other applications resolve hostnames to IP addresses without relying on DNS, which is particularly useful in environments where DNS resolution is unreliable or slow. Each line contains an IP address followed by a canonical hostname and any optional aliases, all separated by whitespace. Text from a # to the end of a line is a comment.

Here’s an example of what a typical known hosts file might look like:

```
127.0.0.1 localhost
::1 localhost ip6-localhost ip6-loopback
fe00::0 ip6-localnet
ff00::0 ip6-mcastprefix
ff02::1 ip6-allnodes
ff02::2 ip6-allrouters
192.168.1.1 server1
192.168.1.2 server2
192.168.1.3 server3
```
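To make the format concrete, here is a minimal Python sketch that splits hosts-file text into an address and its names. It follows the hosts(5) layout: an address, then one or more names, with # starting a comment. The function name `parse_hosts` and the sample data are illustrative, not part of any library.

```python
from typing import List, Tuple


def parse_hosts(text: str) -> List[Tuple[str, List[str]]]:
    """Parse hosts-file text into (ip_address, [hostname, *aliases]) pairs."""
    entries = []
    for raw in text.splitlines():
        # Everything from '#' to the end of the line is a comment.
        line = raw.split('#', 1)[0]
        fields = line.split()
        if len(fields) < 2:
            continue  # blank, comment-only, or malformed line
        entries.append((fields[0], fields[1:]))
    return entries


sample = """127.0.0.1 localhost
::1 localhost ip6-localhost ip6-loopback
192.168.1.1 server1  # lab router
"""

for ip, names in parse_hosts(sample):
    print(ip, names)
```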

Identifying Duplicates

Identifying duplicates in the known hosts file can be challenging, especially if the file is large. However, there are several methods you can use to find them:

- Manual Inspection: This is the most time-consuming method but can be effective for small files. Simply open the file in a text editor and look for duplicate lines.

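- Command Line Tools: Tools like `grep` and `awk` can be used to search for duplicates. For example, the following command lists IP addresses that appear on more than one line, skipping blank lines and comments:

```shell
awk '!/^[[:space:]]*#/ && NF { print $1 }' /etc/hosts | sort | uniq -d
```

To list only lines that are repeated exactly, `sort /etc/hosts | uniq -d` is enough.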

- Scripting: Writing a script to automate the process can save time, especially if you have multiple known hosts files to check. Below is a simple Python script that identifies duplicates in a known hosts file:

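```python
# remove_duplicates.py
def find_duplicates(file_path):
    """Return entries that appear more than once, ignoring blank lines and comments."""
    with open(file_path, 'r') as file:
        lines = file.readlines()

    unique_lines = set()
    duplicates = []
    for line in lines:
        # Collapse runs of whitespace so "a  b" and "a b" compare equal.
        entry = ' '.join(line.split())
        if not entry or entry.startswith('#'):
            continue  # blank lines and comments are not host entries
        if entry in unique_lines:
            duplicates.append(entry)
        else:
            unique_lines.add(entry)
    return duplicates


if __name__ == '__main__':
    file_path = '/etc/hosts'
    duplicates = find_duplicates(file_path)
    for duplicate in duplicates:
        print(duplicate)
```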
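Exact duplicate lines are only one kind of duplicate. A hostname listed on two lines with different addresses is easy to miss, and a line-by-line comparison will not catch it. The sketch below is an illustrative extension, not part of the original script. It groups names by IP version so that the usual localhost pair (127.0.0.1 and ::1) is not reported. The helper name `conflicting_names` is hypothetical.

```python
import ipaddress
from collections import defaultdict
from typing import Dict, Set, Tuple


def conflicting_names(text: str) -> Dict[Tuple[str, int], Set[str]]:
    """Map (hostname, IP version) to its addresses when it has more than one."""
    seen: Dict[Tuple[str, int], Set[str]] = defaultdict(set)
    for raw in text.splitlines():
        fields = raw.split('#', 1)[0].split()
        if len(fields) < 2:
            continue  # blank, comment-only, or malformed line
        try:
            version = ipaddress.ip_address(fields[0]).version
        except ValueError:
            continue  # first field is not a valid IP address; skip the line
        for name in fields[1:]:
            # Hostnames are case-insensitive, so compare them in lower case.
            seen[(name.lower(), version)].add(fields[0])
    return {key: addrs for key, addrs in seen.items() if len(addrs) > 1}


sample = """127.0.0.1 localhost
::1 localhost ip6-localhost
192.168.1.2 server2
192.168.1.20 server2
"""
print(conflicting_names(sample))
```

Keying on the IP version as well as the name is a design choice: a name with one IPv4 and one IPv6 address is normal, while two IPv4 addresses for the same name usually indicate a stale entry.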

Removing Duplicates

Once you’ve identified the duplicates, you can remove them using a text editor or command line tools. Here are some options:

- Text Editor: Open the known hosts file in a text editor and delete the duplicate lines. Save the file and exit.

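- Command Line Tools: You can use `awk` to remove duplicate lines while keeping the first occurrence of each and the original order. The usual `sed` one-liner for this job only removes duplicates that sit on adjacent lines, so it is not used here. Run the following as root. It saves a backup first, then rewrites /etc/hosts in place, keeping blank lines and comments:

```shell
cp /etc/hosts /etc/hosts.bak && awk '!NF || /^[[:space:]]*#/ || !seen[$0]++' /etc/hosts.bak > /etc/hosts
```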
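If you would rather do this from Python, the sketch below keeps the first occurrence of each entry in its original position, preserves blank lines and comments, and backs the file up before rewriting it. The helper names and the `.bak` suffix are illustrative choices, not an established tool. Entries are compared after collapsing whitespace, so lines that differ only in spacing count as duplicates.

```python
import shutil


def dedupe_hosts_text(text: str) -> str:
    """Drop repeated host entries, keeping the first occurrence and its position.

    Blank lines and comment lines are always kept, so the file's layout survives.
    """
    seen = set()
    kept = []
    for line in text.splitlines(keepends=True):
        stripped = line.strip()
        if not stripped or stripped.startswith('#'):
            kept.append(line)
            continue
        # Normalise whitespace so entries differing only in spacing match.
        key = ' '.join(stripped.split())
        if key not in seen:
            seen.add(key)
            kept.append(line)
    return ''.join(kept)


def dedupe_hosts_file(path: str) -> None:
    """Back up `path` to `path + '.bak'`, then rewrite it without duplicates."""
    shutil.copy2(path, path + '.bak')
    with open(path, 'r') as f:
        original = f.read()
    # Writing in place (rather than replacing the file) keeps its owner and mode.
    with open(path, 'w') as f:
        f.write(dedupe_hosts_text(original))
```

For example, running `dedupe_hosts_file('/etc/hosts')` as root rewrites the file. Check the result against /etc/hosts.bak before deleting the backup.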

Preventing Future Duplicates

Preventing duplicates from appearing in the known hosts file is just as important as removing them. Here are some tips to help you avoid duplicates:

- Use a Centralized Repository: Keep the master copy of the file in a version-controlled repository and make all changes there, so every edit is reviewed and recorded.

- Automate the Process: Use scripts or tools to manage your known hosts file, ensuring that changes are made consistently and accurately.

- Regularly Review the File: Periodically review the known hosts file for duplicates and other errors.
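The automation and review tips above can be enforced by a small check that fails when the file contains repeated entries, run from a pre-commit hook or CI job. This is a sketch under assumptions. The function names are hypothetical, inline comments are ignored when comparing entries, and a return value of 1 signals failure, following the usual shell exit-status convention.

```python
import sys
from typing import List


def duplicate_entries(text: str) -> List[str]:
    """Return host entries (whitespace-normalised) that occur more than once."""
    seen = set()
    reported = set()
    repeated = []
    for line in text.splitlines():
        # Drop any inline comment and normalise whitespace before comparing.
        entry = ' '.join(line.split('#', 1)[0].split())
        if not entry:
            continue  # blank line or comment-only line
        if entry in seen and entry not in reported:
            reported.add(entry)
            repeated.append(entry)
        seen.add(entry)
    return repeated


def check(path: str) -> int:
    """Print any duplicates in `path` to stderr; return 1 if found, else 0."""
    with open(path) as f:
        repeated = duplicate_entries(f.read())
    for entry in repeated:
        print(f'duplicate entry in {path}: {entry}', file=sys.stderr)
    return 1 if repeated else 0
```

In a CI step, `sys.exit(check('hosts'))` would fail the job when duplicates are present. Here, `hosts` stands for wherever the file lives in your repository.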

Conclusion

Removing duplicates from your known hosts file is an important task that can help ensure your system’s reliability and performance. By understanding the file’s structure, identifying duplicates, and